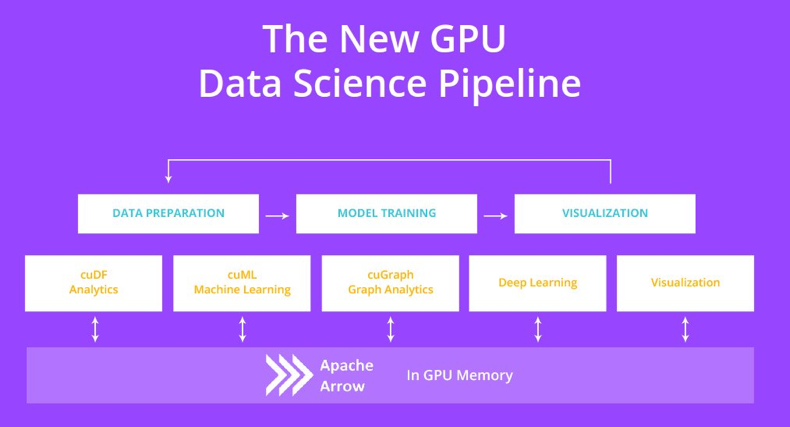

Accelerating Scikit-Image API with cuCIM: n-Dimensional Image Processing and I/O on GPUs | NVIDIA Technical Blog

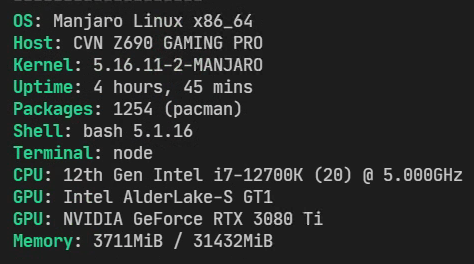

Random segfault training with scikit-learn on Intel Alder Lake CPU platform - vision - PyTorch Forums

Tensors are all you need. Speed up Inference of your scikit-learn… | by Parul Pandey | Towards Data Science

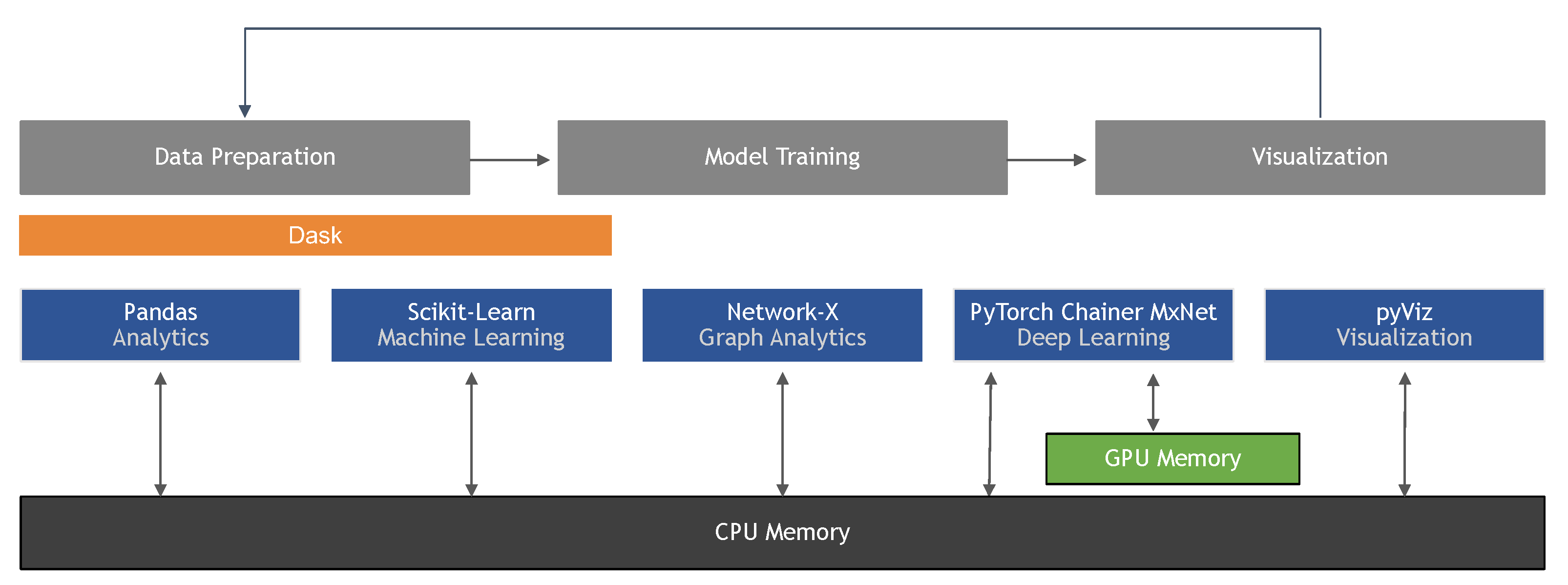

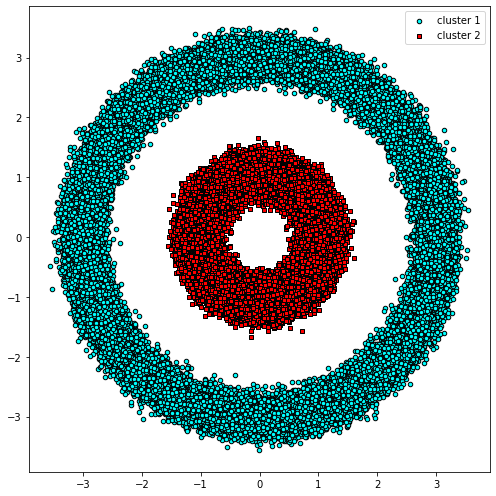

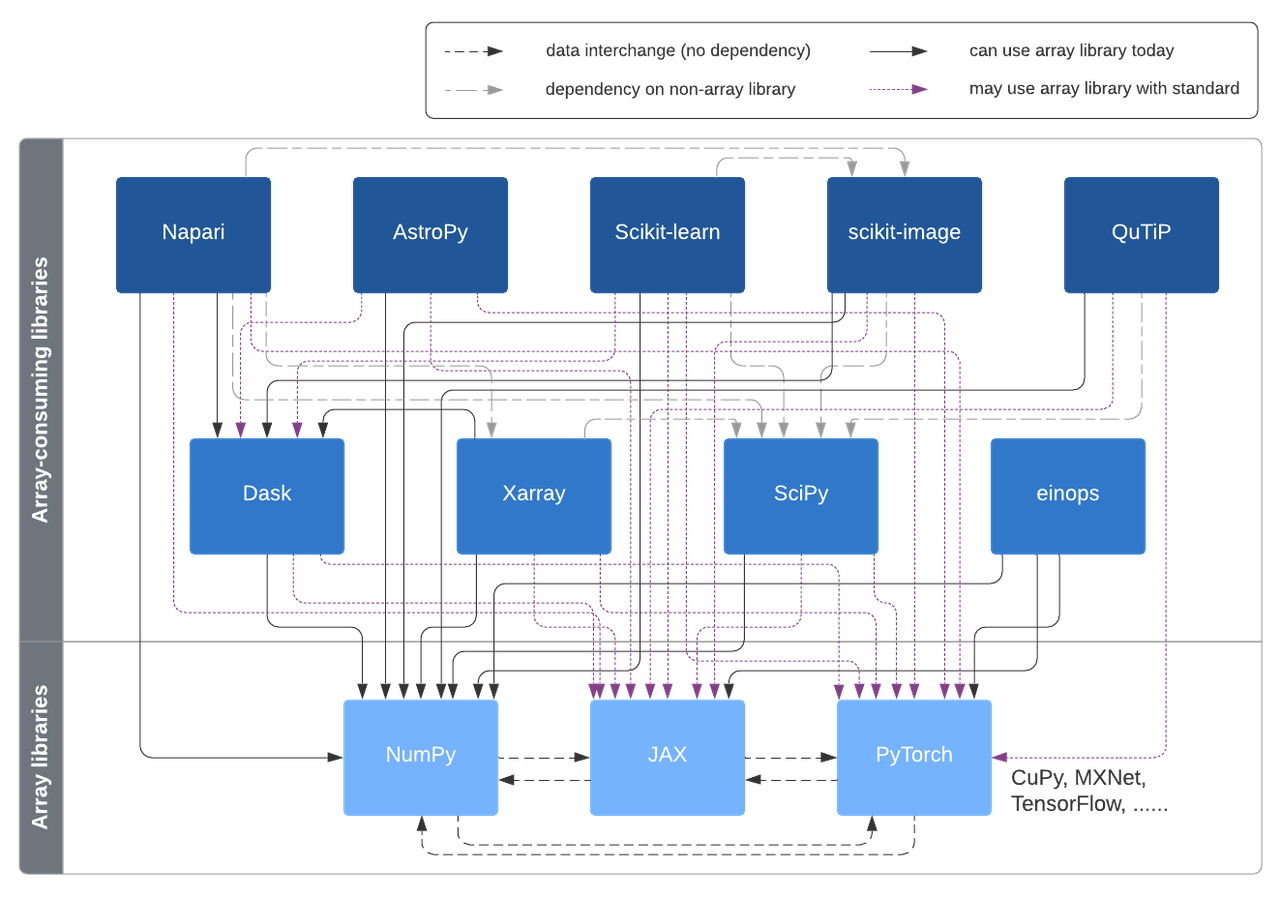

A vision for extensibility to GPU & distributed support for SciPy, scikit-learn, scikit-image and beyond | Quansight Labs

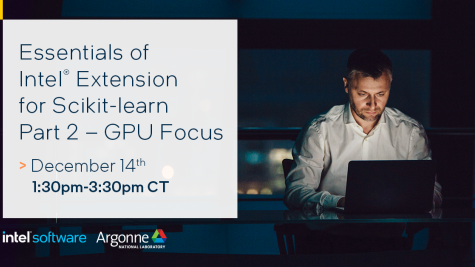

Aurora Learning Paths: Intel Extensions of Scikit-learn to Accelerate Machine Learning Frameworks | Argonne Leadership Computing Facility