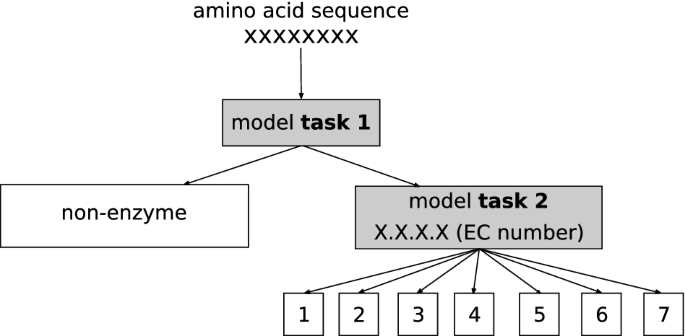

Effect of sequence padding on the performance of deep learning models in archaeal protein functional prediction | Scientific Reports

GitHub - b2slab/padding_benchmark: Analysis of the effect of sequence padding on the performance of a hierarchical EC number prediction task.

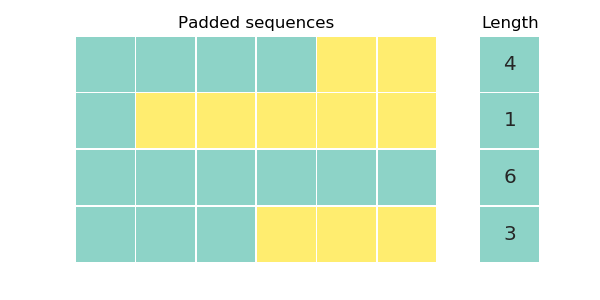

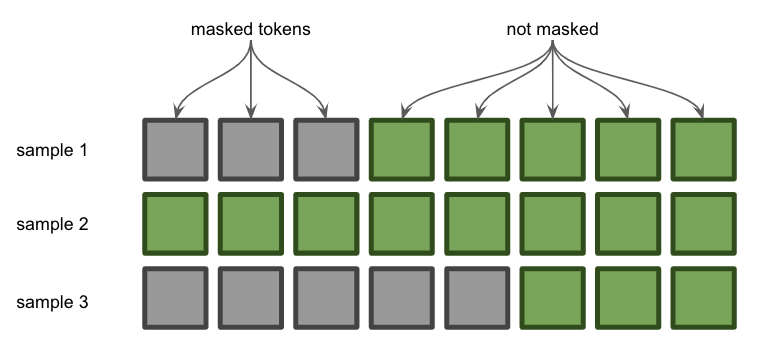

Post Sequence Padding. The values in bold are removed after truncation.

Detailed process of S-padding strategy. a Protein sequence of amino...

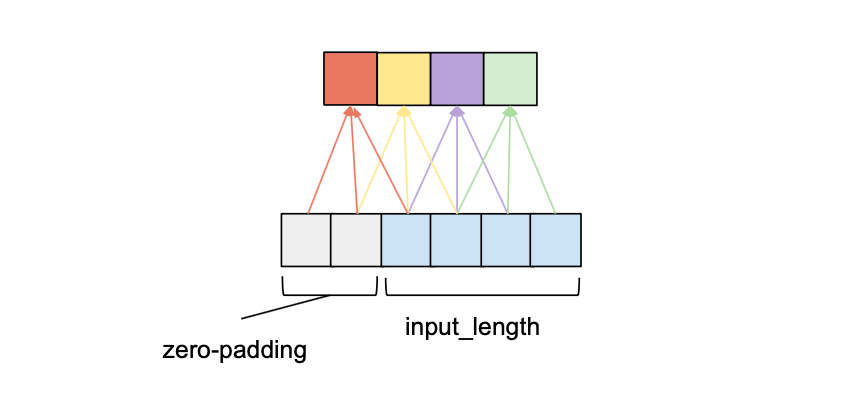

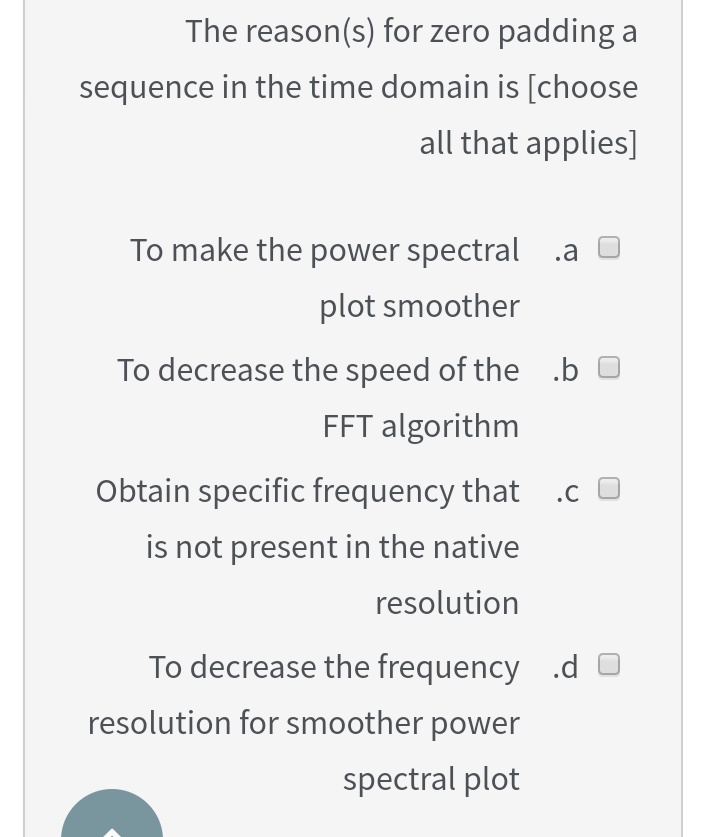

Padding Methods in Convolutional Sequence Model: An Application in Japanese Handwriting Recognition | Semantic Scholar

NLP with Tensorflow and Keras. Tokenizer, Sequences and Padding (video)